Python, Performance, and GPUs. A status update for using GPU… | by Matthew Rocklin | Towards Data Science

Beyond CUDA: GPU Accelerated Python for Machine Learning on Cross-Vendor Graphics Cards Made Simple | by Alejandro Saucedo | Towards Data Science

![\[Update 2\] How to build and install TensorFlow GPU/CPU for Windows from source code using bazel and Python 3.6 | by Aleksandr Sokolovskii | Medium](https://miro.medium.com/max/1024/1*kfAK2kFVuPv8h7hWXEdAHg.png)

[Update 2] How to build and install TensorFlow GPU/CPU for Windows from source code using bazel and Python 3.6 | by Aleksandr Sokolovskii | Medium

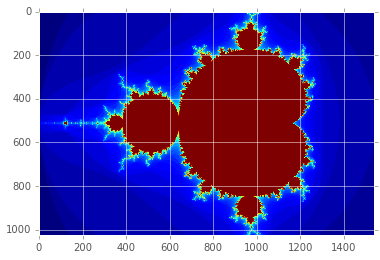

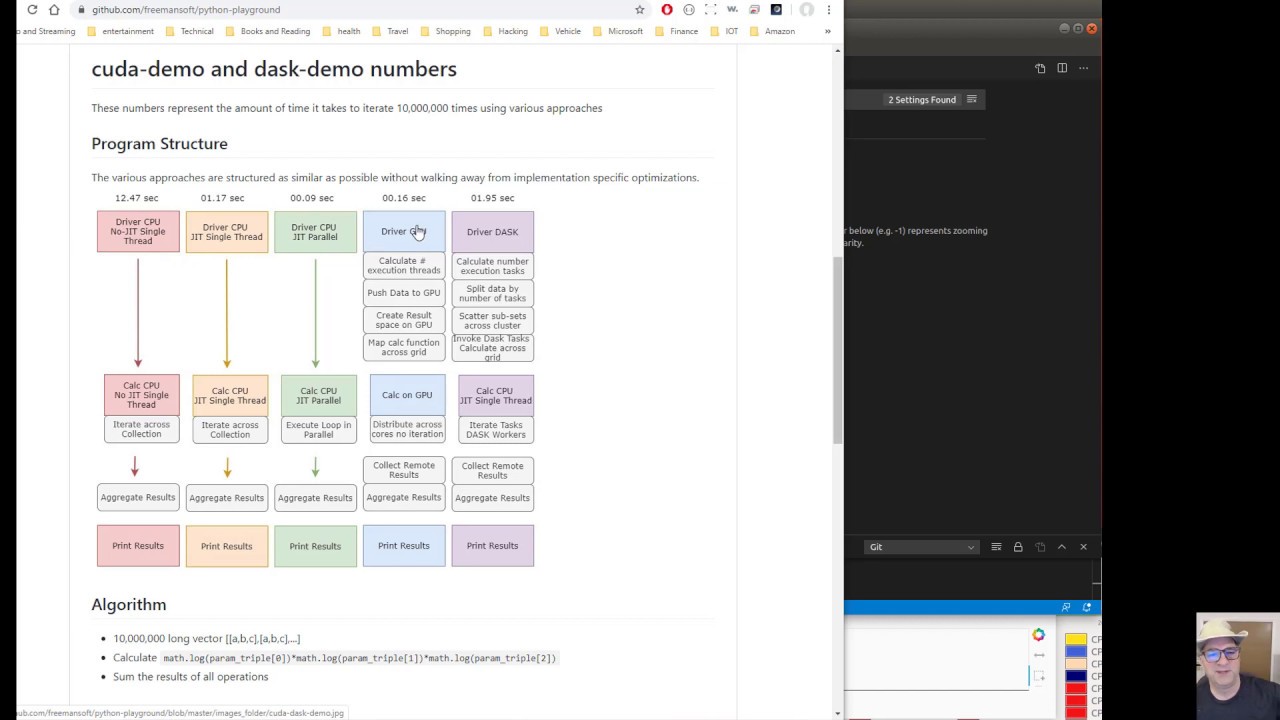

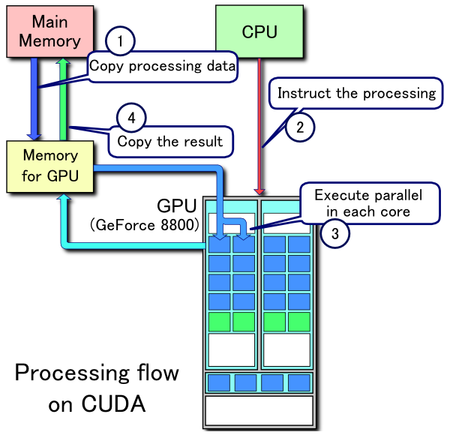

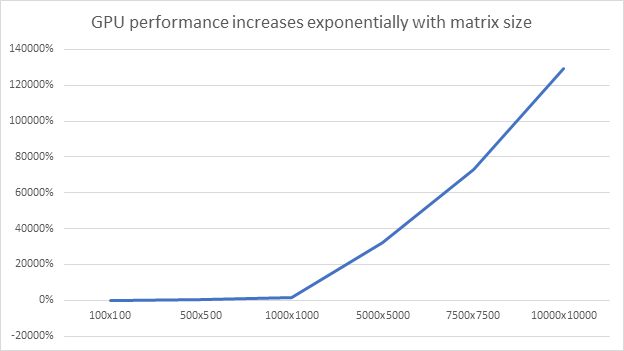

A Complete Introduction to GPU Programming With Practical Examples in CUDA and Python - Cherry Servers

GitHub - PacktPublishing/Hands-On-GPU-Programming-with-Python-and-CUDA: Hands-On GPU Programming with Python and CUDA, published by Packt
