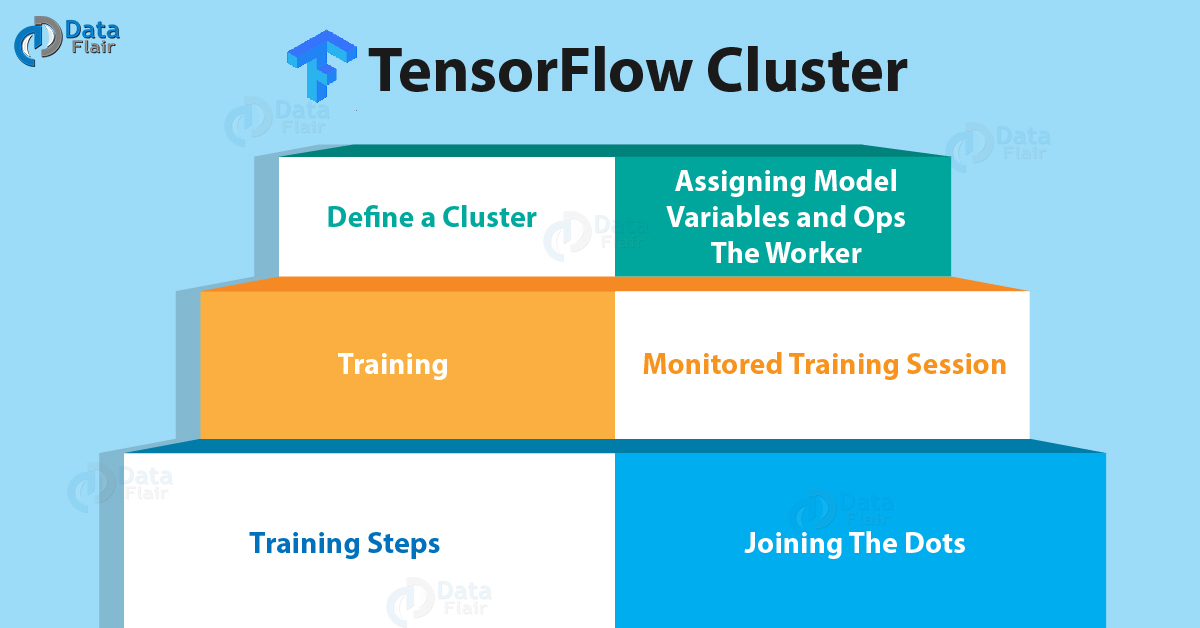

TensorFlow as a Distributed Virtual Machine | Open Data Science

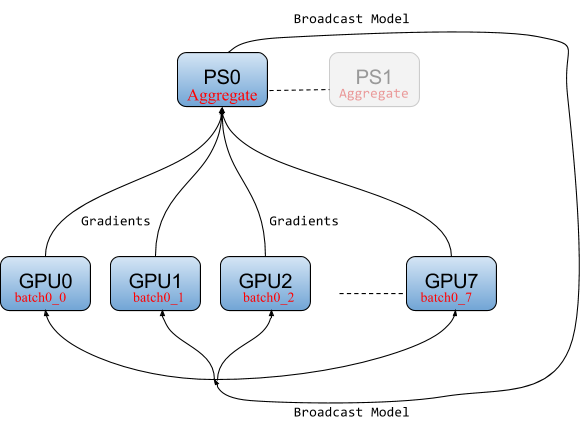

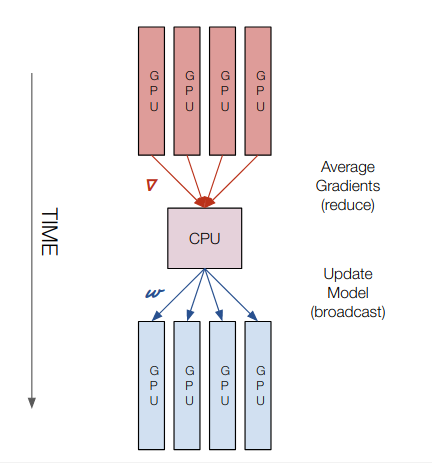

Launching TensorFlow distributed training easily with Horovod or Parameter Servers in Amazon SageMaker | AWS Machine Learning Blog

Distributed Deep Learning Training with Horovod on Kubernetes | by Yifeng Jiang | Towards Data Science

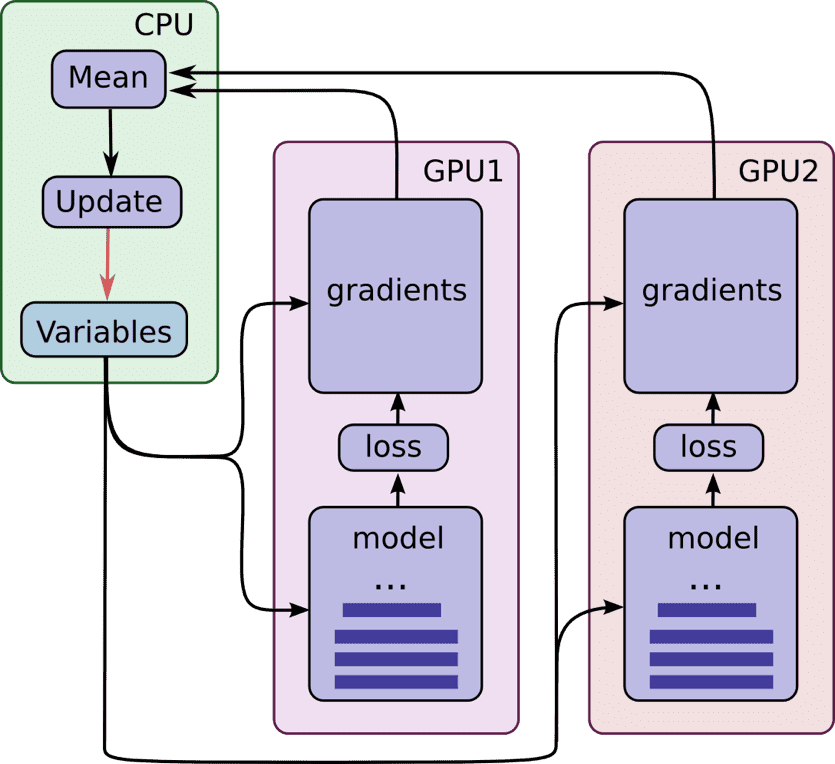

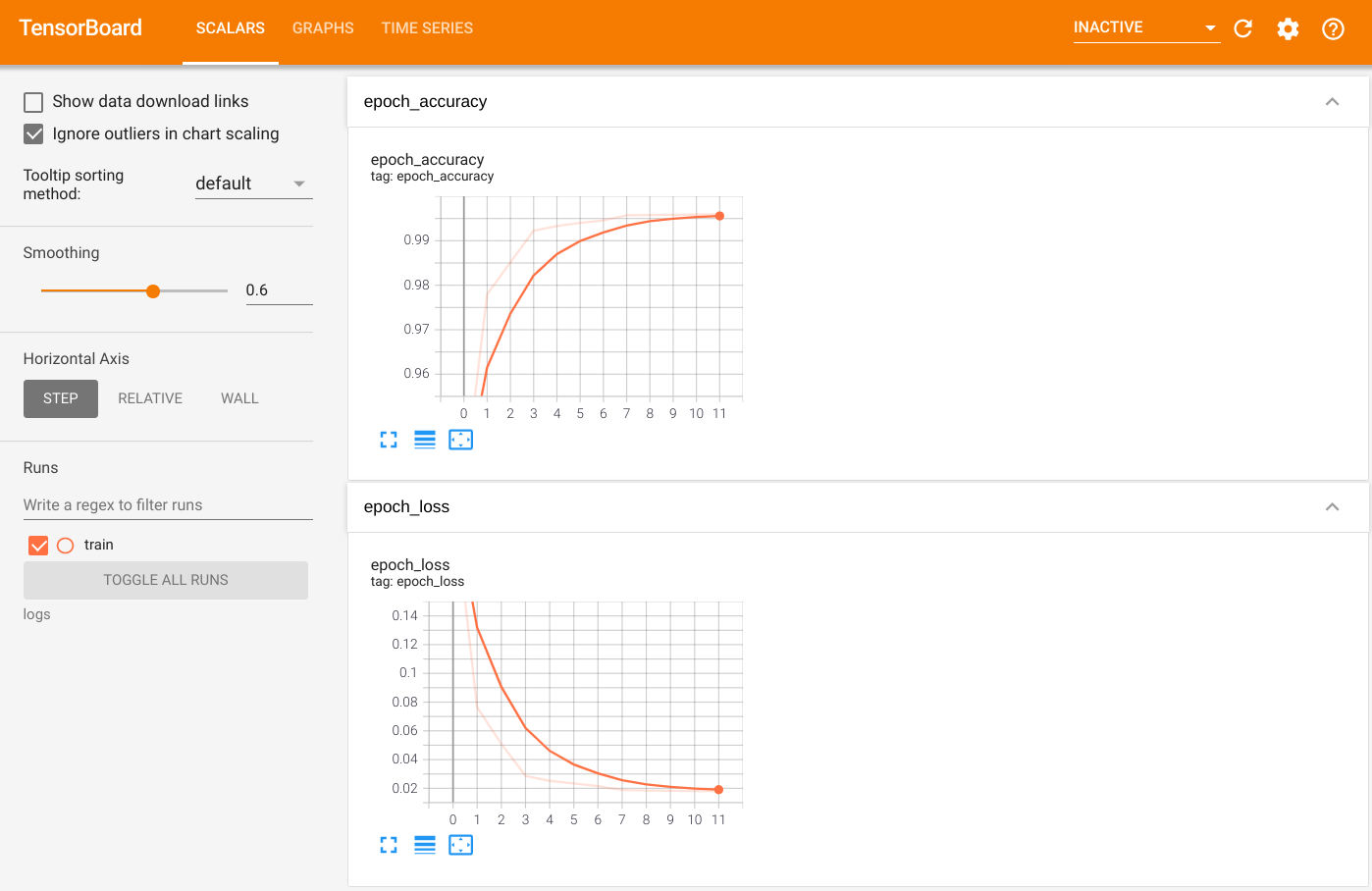

GitHub - sayakpaul/tf.keras-Distributed-Training: Shows how to use `MirroredStrategy` to distribute training workloads when using the regular `fit` and `compile` paradigm in `tf.keras`.